Christophe Carugati

The European Union Digital Markets Act (DMA) bans large online platforms under its scope from treating their own products more favourably than rivals.

Executive summary

Large online platforms are intermediaries between end-users and business users. They sometimes offer their own products and services alongside those of rivals. This can lead to platforms promoting their offers over those of competitors, a practice known as self-preferencing.

The European Union Digital Markets Act (DMA) bans large online platforms under its scope from treating their own products and services more favourably than those of rivals when ranking, crawling and indexing. Platforms – or gatekeepers in the DMA definition – should apply transparent, fair and non-discriminatory conditions when ranking products and services. However, identifying and detecting self-preferencing, complying with the ban and monitoring compliance are complex and resource-intensive. These steps require a case-by-case approach and access to and analysis of platform data and algorithms.

The DMA allows gatekeepers to promote their own products and services if rivals are subject to the same treatment. Nonetheless, the DMA neither defines equal treatment nor the main elements of self-preferencing, thus preventing platforms within the DMA’s scope from correctly applying the prohibition.

To ease enforcement, the European Commission should issue guidance on what constitutes self-preferencing under the DMA, outlining two main principles. First, gatekeepers should use objective and unbiased parameters to determine ranking, indexing and crawling. Second, gatekeepers should demonstrate equal treatment.

The guidance will make compliance easier. The Commission should then monitor compliance by appointing and ensuring sufficient rotation of external auditors to avoid capture by gatekeepers. The Commission should also work with competent national authorities that develop technological tools. When monitoring flags a risk of non-compliance, the Commission should then further specify the ban on self-preferencing on a case-by-case basis.

1 Introduction

Large online platforms act as intermediaries between business users and end users. Some also compete directly with business users by providing their own products and services, integrated into their core product offerings. Platforms can therefore have the ability and incentives to provide differentiated or preferential treatment to their products and services compared to that provided to rivals – a practice known as self-preferencing (Cabral et al, 2021).

An example of self-preferencing is the conduct sanctioned in the 2017 Google Shopping decision. The European Commission, the European Union’s competition enforcer, found that Google had promoted its own comparison shopping service via its general search engine, while demoting rival services (European Commission, 2017) (see section 4). In 2021, the EU General Court confirmed the Commission’s decision[1].

Self-preferencing distorts the competitive process by unduly promoting the products and services of the platform, resulting in the exclusion of rivals. Several expert reports have found that self-preferencing is a common practice of large online platforms in digital markets, and poses significant competition issues (House of Representatives, 2020; Expert Group for the Observatory on the Online Platform Economy, 2021). However, the differential treatment might in some cases be justified objectively and might provide efficiencies to consumers, such as a higher-quality search engine[2]. In competition law, the practice thus requires the pro and anti-competitive effects to be weighed in an effect-based analysis on a case-by-case basis (Crémer et al, 2019).

The European Union’s Digital Markets Act (DMA), which entered into force in November 2022, prohibits self-preferencing, among other rules. The DMA applies to ‘gatekeepers’, or large, hard-to-avoid online platforms that mediate between business users and end users. The DMA’s self-preferencing prohibition (Art. 6(5) DMA) is subject to further specification by the Commission and does not allow gatekeepers to provide objective justifications showing that the practice generates pro-competitive effects. Therefore, there is no room for an economic discussion about whether self-preferencing is welfare-enhancing or welfare-reducing. Moreover, the prohibitions imply that identification and detection of potential differentiation by the Commission is sufficient to show that the gatekeeper might not be complying with the rule. The economic discussion is thus only relevant in relation to the proper identification and detection by the Commission of the practices addressed by the DMA. Failing to properly identify and detect will inevitably lead to costs for gatekeepers and consumers in case of over- or under-enforcement.

The ban on self-preferencing thus raises challenging practical implementation issues. As well as identifying and detecting practices amounting to self-preferencing, the Commission must propose compliance measures that it can monitor effectively. This policy contribution aims to contribute to the implementation of the DMA by providing background information on the self-preferencing ban, and recommendations on the identification, detection, compliance and monitoring of the practice, in order to avoid risks of over- or under-enforcement.

2 The DMA self-preferencing ban

The DMA defines self-preferencing narrowly, with negative and positive elements. According to the DMA Art. 6(5), the prohibition on self-preferencing means:

“The gatekeeper shall not treat more favourably, in ranking and related indexing and crawling, services and products offered by the gatekeeper itself than similar services or products of a third party. The gatekeeper shall apply transparent, fair and non-discriminatory conditions to such ranking.”

2.1 Negative elements in the definition

On the negative side, gatekeepers must not provide differentiated or preferential treatment to their products and services on their core platform services over competing products/services from third parties, through legal, commercial or technical means (Recital 52 DMA). However, this ban is limited strictly to treatment related to ranking, indexing and crawling.

Ranking relates to the presentation, organisation and communication of information about products and services and search results in various forms, including display, rating, linking or voice results[3].

The DMA also covers the upstream process that leads to ranking, namely crawling and indexing. Crawling refers to the discovery process of finding information, whereas indexing refers to the storage and organisation of the information found during the crawling process (Recital 51 DMA).

2.2 Positive elements in the definition

On the positive side, gatekeepers must provide treatment that is transparent, fair and non-discriminatory.

Transparency means that gatekeepers should provide information on the parameters that determine ranking, crawling and indexing.

The parameters must be fair. They should not create an imbalance in the rights and obligations between the gatekeepers and business users, through which gatekeepers obtain a disproportionate advantage, according to the definition of unfairness in the DMA (Recital 33 DMA).

Fairness implies a requirement to be non-discriminatory. In the specific case of differentiated treatment, non-discriminatory refers to the general principle of equal treatment, defined in case law as meaning “that comparable situations must not be treated differently and different situations must not be treated in the same way unless such treatment is objectively justified” (General Court, 2021). For instance, it implies that the ranking of similar products, such as hotels, must be subject to the same algorithmic treatment. In other words, fairness and non-discriminatory conditions imply the use of parameters that are objective and unbiased.

Therefore, the positive element implies that gatekeepers can promote their own services and products over rivals insofar as the treatment is objective and unbiased.

Gatekeepers cannot promote their own products and services based on a criterion related to the affiliation of the products or services with the gatekeepers – for instance, Google cannot promote its own comparison shopping service over rivals because the service is Google’s – but could promote their own offers based on parameters related to internet traffic. For instance, Google can promote its own comparison shopping service over rivals because Google’s service is the leading comparison shopping service in terms of internet traffic.

The EU already imposes fairness and transparency requirements on online intermediation services and online search engines in relation to business users (in the 2019 regulation on platform to business (P2B) relations, Regulation (EU) 2019/1150). Among its requirements, the P2B regulation refers specifically to ranking (Art. 5 P2B) and requires online intermediation services and online search engines to set out in their terms and conditions the main parameters determining ranking and the reasons for the relative importance of those parameters as opposed to others. Gatekeepers must thus comply with the DMA in line with the P2B regulation and its accompanying guidelines on ranking transparency (European Commission, 2020).

However, the next section on the identification of self-preferencing shows that determining objective and unbiased parameters is complex.

3 Identifying self-preferencing

The identification of self-preferencing is complex because of the difficulties in defining objective and unbiased parameters, and the need for the European Commission to access and analyse data and algorithms.

3.1 Definition of objective and unbiased parameters

The Commission can identify whether gatekeepers self-preference their products or services by assessing the parameters used for ranking, crawling and indexing. In line with the P2B regulation, gatekeepers should describe the parameters to the Commission in plain and intelligible language. As noted in section 2.2, the parameters should be objective, unbiased and in compliance with the P2B regulation. However, assessing whether parameters are objective and unbiased is complex for two main reasons.

First, the assessment requires a case-by-case approach for each product or service. As noted by the European Commission (2020) in its guidelines on ranking transparency, the main parameters used for ranking should be defined for each service separately. In other words, the Commission cannot take a holistic approach to identify self-preferencing.

Second, the assessment is inevitably subjective. The Commission must assess whether the parameters determining ranking, crawling and indexing are objective and unbiased. However, the choice of parameters is inherently subjective as it results from internal reflection by the gatekeepers, aiming to ensure equal treatment between themselves and rivals (Box 1).

The example in Box 1 shows how difficult identification is even for a single parameter, price, which is objective but might be used to bias the ranking in favour of the gatekeeper’s own products/services, thus preventing the correct identification of self-preferencing. In practice, the Commission will face even more challenging issues, as rankings are often determined by a combination of several criteria, including personalisation based on personal data (European Commission, 2020). The Commission thus cannot assess parameters in isolation. Some factors, such as personalisation, will inevitably involve differentiation, as the goal of ranking is to provide the information that users are looking for (Expert Group for the Observatory on the Online Platform Economy, 2021). The Commission will thus inevitably have to accept trade-offs in determining whether the parameters are biased and, therefore, non-compliant with the DMA.

Box 1: Example of the choice of price as an objective and unbiased parameter

Suppose that the e-commerce platform Amazon is a gatekeeper under the DMA and chooses price as an objective and unbiased parameter to rank its products and those of rivals on its e-commerce platform. Suppose that the parameter places Amazon’s products first because they are cheaper than those of competitors. Amazon might have the incentive to choose price because it knows its products and services are cheaper than those of rivals because of various economic factors, such as economies of scale (subjective consideration).

In that instance, the Commission and third parties might interpret the parameter as objective but biased so that it intentionally promotes Amazon’s products (Cabral et al, 2021). By contrast, Amazon might choose this criterion because it is a relevant parameter expected by its end users and business users in order to find products and services (objective consideration). In that situation, the Commission might interpret the parameter as objective and unbiased.

In other words, irrespective of whether the Commission only focuses on the “main parameters” as required by the P2B regulation, or all parameters, the assessment might lead the Commission to identify practices of self-preferencing where there are none, leading to over-enforcement. By contrast, the Commission might fail to identify practices of self-preferencing, leading to under-enforcement. Both over- and under-enforcement will inevitably lead to costs for gatekeepers and consumers. The Commission could assess the internal reflections of the gatekeeper through the reasoning that gatekeepers should provide in line with the P2B regulation. However, the Commission will lack the data that leads gatekeepers to choose one parameter over another.

Therefore, it cannot evaluate whether the decision is driven by objective or subjective considerations to promote the gatekeepers’ products or services. Moreover, the analysis of the incentive for self-preferencing, and its economic literature, are irrelevant in the context of the DMA, because the DMA imposes rules irrespective of whether the gatekeeper has the ability and incentive to implement them.

3.2 Access to and analysis of data and algorithms

Ranking is a data-driven algorithmic decision-making process (para. 11, guidelines on ranking transparency, European Commission, 2020). Accordingly, gatekeepers should, in line with the P2B regulation, provide an adequate understanding of the main parameters determining rankings (Art. 5(5) P2B). The description should be based at least on “actual data on the relevance of the ranking parameters used” (Recital 27 P2B). Moreover, the description does not require gatekeepers to “disclose algorithms or any information that, with reasonable certainty, would result in the enabling of deception of consumers or consumer harm through the manipulation of search results” (Art. 5(6) P2B). In other words, gatekeepers must only provide a general description of the main ranking parameters based on actual data.

Nonetheless, the Commission can request access to and conduct inspections into gatekeepers’ data and algorithms to monitor compliance with the regulation. In particular, it can request that gatekeepers explain and provide information about the tests they ran to rank products and services (Arts. 21, 23 and Recital 81 DMA).

In practice, the Commission could thus audit data and algorithms to identify self-preferencing. While useful for reducing information asymmetry, an audit can neither rule out identification errors nor guarantee perfect information, for two main reasons.

First, the design of fair algorithms is impossible. Algorithms inherently present various biases, including biases in data and algorithm development (Tolan, 2018). If price is chosen as an objective parameter, for example, bias could arise because price is the outcome of various cost factors and large platforms might offer lower prices than rivals because of economies of scale (Box 1). Gatekeepers could thus select parameters that intentionally favour their products and services. However, the cost factors that make up a price are generally not observable by a third party, and the counterfactual scenario is never observable because it does not exist. The Commission will thus have difficulty identifying whether the gatekeeper chooses price in order to develop an algorithm that self-preferences its own products and services.

Second, the explanation is not perfectly observable. The gatekeeper possesses the information underlying the choice of a parameter. A gatekeeper would probably explain that it selects price, for example, because price is observable by all stakeholders, is quantifiable and is a parameter of competition that end users want to know to buy products and services. The explanation seems totally objective and fair. However, the gatekeeper might not explain that price is a relevant parameter because it has a competitive advantage over rivals in terms of price. Therefore, the Commission might not know all the information that leads gatekeepers to select a given parameter.

In summary, the Commission can only identify self-preferencing in relation to a list of objective and unbiased parameters, which it should develop to ensure legal certainty. It can take inspiration from the list given in the guidelines on ranking transparency (European Commission, 2020). Moreover, given the information asymmetry between gatekeepers and the Commission, the Commission should require gatekeepers to show, based on independent testing, that the parameters they choose are objective and unbiased. This testing should involve removing allegedly biased parameters, such as price or the affiliation of the products or services with the platform. This will minimise risks of under- or over-enforcement, and reduce the burden on the Commission in identifying self-preferencing.
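The kind of independent test described above, in which a parameter is removed and the resulting ranking is compared with the original, could be sketched as follows. This is only an illustrative sketch under strong assumptions: the linear scoring rule, the parameter names (`relevance`, `price`, `affiliation`), the weights and the three offers are all hypothetical and are not taken from the DMA, the P2B regulation or any real platform.

```python
from dataclasses import dataclass

@dataclass
class Offer:
    name: str
    own: bool        # hypothetical flag: True if offered by the gatekeeper itself
    features: dict   # parameter name -> normalised score in [0, 1] (assumed scale)

def rank(offers, weights):
    """Return offer names ordered by weighted score, best first."""
    def score(offer):
        return sum(w * offer.features.get(p, 0.0) for p, w in weights.items())
    return [o.name for o in sorted(offers, key=score, reverse=True)]

def mean_own_position(offers, weights):
    """Average 1-based rank position of the gatekeeper's own offers."""
    order = rank(offers, weights)
    positions = [order.index(o.name) + 1 for o in offers if o.own]
    return sum(positions) / len(positions)

def ablation_shift(offers, weights, parameter):
    """Change in the own offers' mean position when one parameter is removed.

    A positive value means the own offers fall in the ranking once the
    parameter is dropped, i.e. the parameter was lifting them.
    """
    reduced = {p: w for p, w in weights.items() if p != parameter}
    return mean_own_position(offers, reduced) - mean_own_position(offers, weights)

# Hypothetical data for illustration only.
offers = [
    Offer("own_service", True, {"relevance": 0.5, "price": 0.9, "affiliation": 1.0}),
    Offer("rival_a", False, {"relevance": 0.8, "price": 0.5, "affiliation": 0.0}),
    Offer("rival_b", False, {"relevance": 0.7, "price": 0.7, "affiliation": 0.0}),
]
weights = {"relevance": 0.5, "price": 0.3, "affiliation": 0.2}

for parameter in weights:
    print(parameter, ablation_shift(offers, weights, parameter))
```

In this made-up data set, dropping the affiliation parameter moves the gatekeeper's own service from first to last place, while dropping price or relevance leaves it first. A shift of this kind would only flag a parameter for closer scrutiny; as section 3.1 argues, it cannot by itself show why the gatekeeper chose the parameter.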

4 Detection

The detection of self-preferencing is even more complex than identification because of the difficulties in defining differentiated or preferential treatment, and the involvement of algorithms.

4.1 Definition of differentiated or preferential treatment

The DMA states that gatekeepers must not treat their products or services more favourably than those of their rivals. However, the text does not define “more favourably”. The recitals only refer to “better position” (Recital 51 DMA) or “prominence” (Recitals 51 and 52 DMA), and the text does not define those terms either. It only notes that prominence includes the display, rating, linking or voice results and the communication of only one result to the end user. The legislators probably left the terms undefined so that the Commission can catch all forms of self-preferencing, and not only cases in which a gatekeeper displays and positions its own offerings at the top of search results. The problem is that the absence of a definition makes it harder to detect self-preferencing.

So far, the Commission has only found practices of self-preferencing in the Google Shopping case (see section 1). In this self-preferencing case, the Commission argued Google had positioned its comparison shopping service so it was always at the top of Google’s generic search results. The Commission also argued Google’s comparison shopping service was displayed in a box with richer graphical features, including images. Rivals were not able to receive the same treatment, and in fact were demoted to the bottom of the first page or subsequent pages of search results by Google’s algorithms. Moreover, rivals were only displayed as generic results in the form of simple linked text without graphical features.

The Commission did not dispute the pro-competitive rationale arising from Google displaying in richer format its product results to improve the quality and relevance of its general search service for the benefit of users. However, the Commission considered the practice anti-competitive because Google’s comparison shopping service and those of rivals were not displayed in the same format and not positioned in the same rank as a result of being subject to different algorithms.

The Commission ordered Google to cease its practices and offer equal treatment. Google did this by including rival comparison shopping services in its Google shopping unit, so they can appear in the unit alongside the Google shopping service. So far, the Commission has considered that Google is complying with the remedy, despite allegations from rivals that it does not comply.

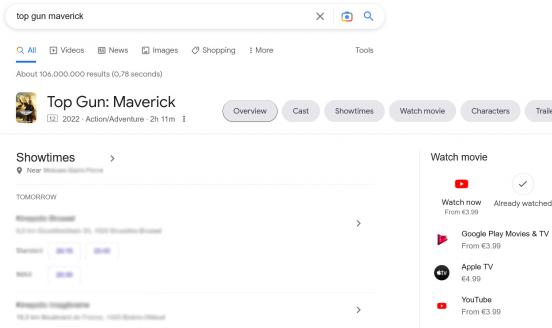

The takeaway from the Google Shopping case is that gatekeepers can display in an enriched format products and services in a prominent position insofar as rivals are treated the same. This could mean a gatekeeper offering a box that displays and positions rivals’ content in the same way as the gatekeeper’s content (Figure 1).

Figure 1: Result of a Google search for the movie Top Gun: Maverick

Source: Bruegel (accessed 7 November 2022).

Figure 1 shows that for a movie query, Google displays and positions its content and those of its rivals in the same way, but Google Play Movie is the first option because it offers a lower price than Apple TV. It is hard to detect whether Google self-preferences its products for two reasons:

Who the rivals are: are they providers of comparison movie services, such as JustWatch, or providers of movies, such as Apple? It is unclear from the query what the user wants to find: a comparison movie service, information on the movie or where to watch it.

Whether Google positions itself better compared to rivals: as detailed in section 3.1, price may not be an objective and unbiased parameter, especially considering that price can be the same for several providers, as Figure 2 shows, with data from JustWatch on the same query as in Figure 1.

Figure 2: Result of a search on JustWatch for the movie Top Gun: Maverick

Source: Bruegel (accessed 7 November 2022).

In sum, the example shows that without a definition of what “more favourably” means, and in relation to which rivals, the Commission will face difficulties detecting the most ingenious forms of self-preferencing, and gatekeepers might not know whether their practices comply with the DMA. In the example, Google must make a costly economic trade-off. It might decide to continue its practice, but at the risk that it is not compliant with the DMA. Or it might choose to discontinue its practice, at the risk of losing a competitive advantage over rivals (see section 5.2).

4.2 The practice involves algorithms

The DMA does not specify the means by which gatekeepers might implement self-preferencing. However, the recitals state that the ban applies whether the practice is carried out through legal, commercial or technical means (Recital 52 DMA).

In practice, most forms of self-preferencing will include technical means, as ranking is a data-driven algorithmic decision-making process. In other words, self-preferencing will involve algorithms. However, neither the P2B regulation nor the DMA requires gatekeepers to disclose algorithms. It will thus be harder for third parties and the Commission to detect practices of self-preferencing without accessing algorithms.

In the Google Shopping case, the Commission found that Google self-preferenced its comparison shopping service because it displayed and positioned its service more prominently and demoted rivals by applying adjustment algorithms that did not apply to Google’s service[4]. While the first part of the practice – the promotion – may be visible, the second part – the demotion – may not.

In this case, to identify demotion of results, the Commission would need to access and analyse the algorithms. It will probably need an in-depth investigation to understand how the algorithms work and why gatekeepers use different algorithms. One reason could be that a box displays specific information that general search results do not display, thus requiring a specific algorithm to collect and process information. In the example illustrated in Figures 1 and 2, suppose that rivals are competing comparison movie services. It is evident that the box (Figure 1) displays specific information that is not displayed for competitors in search results. However, it is far from evident whether Google demotes rivals because the box also displays information that comparison movie services do not display, such as user reviews.

In sum, the Commission will need to access and analyse the algorithms and identify whether gatekeepers demote rivals by using algorithms. Thus, the Commission will have to define demotion and inevitably assess whether the rationale for using different algorithms is to self-preference or display information in an enriched format. This will require a case-by-case analysis for each practice.

The Commission can only detect self-preferencing in relation to the concepts of “more favourably” and “rivals”. It should give a list of indications about how it will identify “more favourably” and “rivals” to ensure legal certainty. The “more favourably” list could include examples, such as the Google Shopping case. “Rivals” could be defined in relation to the main function of the product/service.

5 Compliance

Compliance with the ban on self-preferencing requires a case-by-case approach that preserves pro-competitive features.

5.1 Case-by-case approach

The DMA does not define how gatekeepers must comply with the ban on self-preferencing. The reason is that the DMA is self-executing by nature: gatekeepers must show they comply with the regulation. The DMA thus shifts the burden of proof from the Commission to the gatekeepers. This is particularly relevant as digital cases are complex and require significant information, on which the Commission is systematically in a less-favourable position than gatekeepers (Cabral et al, 2021).

The problem is that in having to demonstrate compliance, gatekeepers can comply according to their expectations and then capture the regulation for their own benefit. Rules in the DMA, including the ban on self-preferencing, are therefore subject to further rulemaking by the Commission to prevent this and tailor the rules to each practice (Arts. 6 and 8, Recital 65 DMA). The Commission can thus dictate how gatekeepers should comply, according to its own compliance expectations. The DMA further mentions clearly that the Commission can tailor the rules where the implementation of an obligation depends on variations of services, which is typically the case with self-preferencing.

The variety of self-preferencing practices implies that the Commission cannot impose general requirements but only tailor them to the specificity of each practice. However, doing so will require human resources, in-depth knowledge of the gatekeeper and the practice, and time. This is particularly problematic considering the limited human resources in the Commission’s competition team in charge of enforcing the DMA (Carugati, 2022). The Commission is thus unlikely to specify how gatekeepers should comply with the ban on self-preferencing for each practice.

Nevertheless, the Commission can adopt guidance on how gatekeepers should comply with the obligations in a way that ensures equal treatment (Art. 47 DMA).

5.2 Safeguard pro-competitive features

The DMA aims to promote innovation, high-quality digital products and services, fair and competitive prices, and high quality of choice for end users (Recital 107 DMA). Thus, the DMA aims to promote innovative pro-competitive features from gatekeepers or rivals.

Therefore, the Commission should not impose compliance measures that undermine pro-competitive features. However, some rivals might lobby to remove pro-competitive features on the basis of allegedly suffering harm from them.

Rivals and plaintiffs in the Google Shopping case have already asked the Commission to use the DMA to remove Google’s shopping unit, namely the display of product results in enriched format[5]. Such removal would prevent innovation and harm consumers. Indeed, Google has argued, and the Commission has not disputed, that it displays its Google shopping unit (comparison shopping service) to improve the quality of search results and to compete with competing search engines[6]. The removal would thus lead to lower quality, less innovation and less competition to the detriment of end-users, in contradiction with the aims of the regulation.

6 Monitoring

Monitoring the ban on self-preferencing requires constant information on the parameters, following reporting by gatekeepers and third parties.

6.1 Constant information on the parameters

The DMA works in tandem with the P2B regulation, which requires gatekeepers to keep the description of the main parameters determining ranking up to date, and to inform business users of any change (European Commission, 2022).

The requirement in the P2B regulation thus implies constant monitoring of the information on the main parameters. The Commission can rely on the obligation under the P2B in order to monitor compliance with the ban on self-preferencing.

6.2 Reporting by gatekeepers and third parties

The DMA obliges gatekeepers to report yearly to the Commission on how they implement measures to comply with the regulation (Art. 11 and Recital 68 DMA). The Commission could thus rely on the report to monitor whether gatekeepers comply with the ban on self-preferencing.

However, this would mean the Commission relying on the information from gatekeepers and not from third parties, which are likely to provide information the gatekeepers will not present. Also, gatekeepers’ reports are updated yearly, whereas gatekeepers can change their practices in the meantime. Annual reports are thus unlikely to reveal up-to-date and complete information on how gatekeepers effectively comply with the regulation.

The Commission should thus also rely on information from third parties about any practice or behaviour by gatekeepers (Art. 27 DMA).

After receiving such information, the Commission could then monitor the obligations and the measures adopted by appointing independent external experts and auditors and requesting the assistance of competent national authorities (Art. 26(2) DMA). The appointment of external auditors is particularly relevant because self-preferencing requires an audit of data and algorithms, as noted in sections 3 and 4 (Cabral et al, 2021).

7 Recommendations

The enforcement of the ban on self-preferencing is complex and requires in-depth legal and technical expertise and significant human resources. Enforcement is thus far more than the simple observation of self-executing provisions. It will instead be the outcome of intense and difficult steps of identification, detection, compliance and monitoring. The Commission should do the following to ease these steps.

The Commission should issue guidance on what constitutes self-preferencing under the DMA. The guidance should contain at least two general principles on how firms should comply with the DMA:

First, gatekeepers should use objective and unbiased parameters when determining ranking, indexing and crawling. Gatekeepers should describe them in plain and intelligible language in line with the P2B regulation. The Commission should define what constitutes objective and unbiased parameters (section 3). Gatekeepers should then explain why their parameters are objective and unbiased, given their products and services, and those of rivals. This first principle should ensure proper identification of self-preferencing.

Second, gatekeepers should demonstrate equal treatment. The Commission should define the key concepts of the ban on self-preferencing, including “more favourably” and “rivals” (see section 4). Gatekeepers should then show that their products and services, and those of rivals, are subject to the same algorithmic treatment, especially in relation to display and positioning. If they are not, gatekeepers should explain why such differentiated treatment is necessary to provide their products and services. This second principle should ensure proper detection of self-preferencing.

The guidance will ease compliance with the prohibition on self-preferencing. The Commission should then monitor compliance by appointing external auditors and ensuring their rotation to prevent them from being captured by gatekeepers (Recital 85 DMA; Cabral et al, 2021). The Commission should ensure auditors have proper access to data and algorithms. The Commission should also work with competent national authorities that develop technological tools[7]. When monitoring flags a risk of non-compliance, the Commission should further develop and explain the ban on self-preferencing on a case-by-case basis.

References

Cabral, L., J. Haucap, G. Parker, G. Petropoulos, T. Valletti and M. Van Alstyne (2021) The EU Digital Markets Act: A Report from a Panel of Economic Experts, European Commission Joint Research Centre, Luxembourg: Publications Office of the European Union

Carugati, C. (2022) ‘How the European Union can best apply the Digital Markets Act’, Bruegel Blog, 4 October, available at https://www.bruegel.org/blog-post/how-european-union-can-best-apply-digital-markets-act

Crémer, J., Y.-A. De Montjoye and H. Schweitzer (2019) Competition policy for the digital era, European Commission, Directorate-General for Competition

European Commission (2020) ‘Commission Notice Guidelines on Ranking Transparency Pursuant to Regulation (EU) 2019/1150 of the European Parliament and of the Council’, 2020/C 424/01, available at https://eur-lex.europa.eu/legal-content/EN/TXT/HTML/?uri=CELEX:52020XC1208(01)&from=EN

Expert Group for the Observatory on the Online Platform Economy (2021) Work stream on differentiated treatment, progress report, European Commission

Tolan, S. (2018) ‘Fair and Unbiased Algorithmic Decision Making: Current State and Future Challenges’, JRC Digital Economy Working Paper 2018-10, European Commission Joint Research Centre

US House of Representatives (2020) Investigation of Competition in Digital Markets, Majority Staff Report and Recommendations, Subcommittee on Antitrust, Commercial and Administrative Law of the Committee on the Judiciary

Footnotes

[1] An appeal against the General Court ruling is pending in the EU Court of Justice. The General Court ruling is Google and Alphabet v Commission (Google Shopping), T-612/17, http://curia.europa.eu/juris/documents.jsf?num=T-612/17.

[2] For instance, a search engine provider might claim that it offers a higher-quality search engine because it provides a specialised search engine integrated into its general search engine that shows specialised results in an enriched format in a box with specific information.

[3] The DMA (Art. 2(22)) defines ranking as “the relative prominence given to goods or services offered through online intermediation services, online social networking services, video-sharing platform services or virtual assistants, or the relevance given to search results by online search engines, as presented, organised or communicated by the undertakings providing online intermediation services, online social networking services, video-sharing platform services, virtual assistants or online search engines, irrespective of the technological means used for such presentation, organisation or communication and irrespective of whether only one result is presented or communicated.”

[4] Paragraph 372, case T‑612/17; see footnote 1.

[5] Natasha Lomas, ‘Google antitrust complainants call for EU to shutter its Shopping Ads Units’, 18 October 2022, TechCrunch, https://techcrunch.com/2022/10/18/eu-antitrust-complaint-google-shopping-units/.

[6] Paragraphs 250-252 of case T‑612/17; see footnote 1.

[7] For instance, in France, the Digital Platform Expertise for the Public (Pôle d’Expertise de la Régulation Numérique, PEReN) initiative has developed a tool to monitor the evolution of platforms’ terms and conditions. To help monitor self-preferencing, the Commission could request the assistance of PEReN to develop a similar tool to monitor the evolution of the description of the main parameters determining ranking, indexing and crawling. Similarly, PEReN is developing a tool to audit black-box algorithms to reduce processing costs incurred by regulators and platforms when accessing and analysing algorithm source code. Such a tool could help the Commission understand gatekeepers’ algorithms in relation to self-preferencing. See https://www.peren.gouv.fr/en/projets/.